Regular Expressions, Text Normalization, Edit Distance

This post contains the notes on Regular Expressions, Text Normalization and Edit Distance which I covered as a part of doing NLP.

Regular Expressions

Regular Expression, often shortened to regex is a language for specifying text strings.

Basics

The simplese kind of regular expression is a sequence of simple characters; putting characters in sequence is called concatenation. For example, the regular expression /abc/ matches the string abc exactly. They are case sensitive; /abc/ does not match Abc or ABC. But this can be solved using square braces []. The string of characters inside the braces specifies a disjunction of characters to match. For instance /[Aa]bc/ matches Abc and abc.

To avoid having to write out all the characters in a disjunction, we can use dash(-) to specify any one character in a range. Following are a few examples of ranges:

| Regex | Matches |

|---|---|

/[0-9]/ |

Any digit |

/[a-z]/ |

Any lowercase letter |

/[A-Z]/ |

Any uppercase letter |

The same square braces can be used to specify what all characters cannot be there. For that we use caret(^). If the caret is the first character inside the square braces, it specifies a negated disjunction. For example, /[^0-9]/ matches any character that is not a digit.

Suppose one character is optional, we can use question mark (?) to specify that. For example, /ab?c/ matches ac and abc. The next trouble is, assume you want to extract cat language, which is meow!, meoow!, meooow!, …. :P . In that case we can use Kleene *. It means zero or more occurances of immediately preceding character. For example, /meoo*w/ matches meow, meoow, meooow, and so on. We have Kleene +, which means one or more occurances of immediately preceding character. This avoids writing the character ‘o’ twice. For example, /meo+w/ matches meow, meoow, and so on. Then we have a wildcard(/./) expression which matches any single character (except a carriage return).

Anchors are special characters that anchor regular expressions to particular places in a string. Most common ones are the caret(^) and the dollar sign($). The caret matches the beginning of a string, and the dollar sign matches the end of a string. For example, /^abc/ matches abc at the beginning of a string, and /abc$/ matches abc at the end of a string.

| Regex | Matches |

|---|---|

^ |

Beginning of string |

$ |

End of string |

\b |

Word boundary |

\B |

Non-word boundary |

The anchor /\bgeo\b\ will matches the word geo but not geology.

Disjunction, Grouping, and Precendence

We can use the pipe symbol (|) to specify a disjunction. For example, /a|b/ matches either a or b. But suppose we need to specify both geography and geology. In that case we can use grouping. For example, /geo(gra|log)y/ matches geography and geology. There is precdence in regular expressions. For example, /a|b|c/ matches a, b, or c. But /a|bc/ matches a or bc. The reason is that the pipe symbol has a lower precedence than the concatenation operator. The following table shows the precedence of the operators in regular expressions, from highest to lowest:

| Paranthesis | () |

| Counters | * + ? {} |

| Sequences and anchors | . ^ $ \b \B |

| Disjunction | | |

More Operators

The following table shows some aliases for common ranges.

| Regex | Expansion | Matches |

|---|---|---|

\d |

[0-9] |

Any digit |

\D |

[^0-9] |

Any non-digit |

\s |

[ \t\n\r\f\v] |

Any whitespace character (space, tab) |

\S |

[^ \t\n\r\f\v] |

Any non-whitespace character |

\w |

[a-zA-Z0-9_] |

Any alphanumeric character |

\W |

[^a-zA-Z0-9_] |

Any non-alphanumeric character |

A range of numbers can also be specified. REs for counting are summarized in the following table.

| Regex | Matches |

|---|---|

* |

Zero or more occurances of immediately preceding character |

+ |

One or more occurances of immediately preceding character |

? |

Zero or one occurances of immediately preceding character |

{n} |

Exactly n occurances of immediately preceding character |

{n,} |

At least n occurances of immediately preceding character |

{n,m} |

At least n and at most m occurances of immediately preceding character |

{,m} |

At most m occurances of immediately preceding character |

Then we have certain special characters referred to by special notations based on backslash (\).

| Regex | Matches |

|---|---|

\t |

Tab |

\n |

Newline |

\? |

Question mark |

\* |

Asterisk |

\. |

Period |

Substitution, Capure Groups

Substitution

It allows a string characterized by a regular expression to be replaced by another string.

Few examples for Substitution:

s/abc/xyz/replaces the first occurrence ofabcwithxyz.s/([0-9]+)/<\1>/replaces the first occurrence of a number with the number in angle brackets. eg: I have 2 cars become I have <2> cars./the (.*)er they were, the \1er they will be/Here this will match the bigger they were, the bigger they will be.

Capture Groups

The use of parantheses to store a pattern in memory is called a capture group. Every time a group is used the resulting match is stored in a numbered register. If you match two different set of parantheses, \2 means whatever matched the second capture group.

Then we have non-capturing group which is specified by putting (?:) after the open parantheses, in the form (?:pattern). This is used if we dont want to capture the resulting pattern in register.

Words

Terminologies

- Corpus - A computer-readable collection of text or speech.

- Utterance - Is the spoken correlate of a since. It has two kind of disfluencies.

- Fillers or filled pauses - Words like

um,uh,like,you know, etc. which are used to fill the silence. - Fragement - broken-off words like

main-and so on.

- Fillers or filled pauses - Words like

- lemma - It is a set of lexical forms having the same stem, the major part-of-speech, and the same word sense. Example:

run,ran,running,runsare all lemmas of the wordrun. - word-form - It is the full inflected form or derived form of the word.

- Types - It is the number of distinct words in a corpus.

Text Normalization

Before almost any naural language processing of a text, the text has to be normalized. At least three tasks are commonly applied as part of any normalization process:

- Tokenization -

- Normalizing word formats

- Segmenting sentences

Word Tokenization

A tokenizer can also be used to expand clitic contradictions that are marked by apostophes, for example, don't and can't are expanded to do not and can not. A clitic is a part of word that can’t stand on its own, and can only occur when it is attached to another word.

One commonly used tokenization standard is called Penn Treebank Tokenization standard. It seperates out clitics (doesn’t becomes does + n’t), keeps hyphanated words together, and seperates out all other punctuation.

Byte Pair Encoding

This kind of tokenization is helpful when dealing with unknown words. To deal this, tokenizers often automatically induce sets of tokens that include tokens smaller than words, called subwords. These words can be arbitrary substrings, or they can be meaning-bearing unit of a language. In modern tokenization most tokens are words, but some of them are frequently occuring morphemes (A morpheme is the smallest meaning-bearing unit of a language; for example the word unlikeliest has the morphemes un-, likely, and -est.) or other subwords like -er.

Most tokenization schemes have 2 parts: a token learner, and a token segmenter. Token learner takes a raw training corpus and induces a vocabulary, a set of tokens. The token segmenter takes a raw test sentences and segments it into the tokens of vocabulary. There algorithms are used widely:

- Byte-pair Encoding (BPE)

- unigram language modeling

- WordPiece

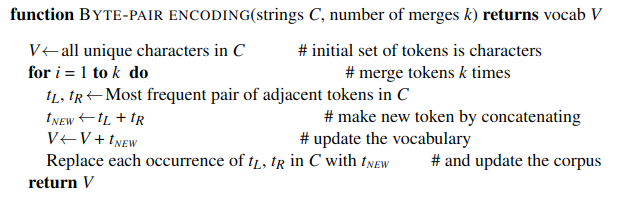

In BPE token learner begins with a vocabulary that is just the set of all individual characters. It then examines the training corpus, chooses the two symbols that are most frequently adjacent (say ‘A’ , ‘B’), adds a new merged symbol ‘AB’ to the vocabulary, and replaces every adjacent ‘A’‘B’ in the corpus with the new ‘AB’. It continues to count and merge, creating new longer and longer character strings, until k merges have been done creating k novel tokens. The resulting vocabulary consists of the original set of characters plus k new symbols.

Algorithm (BPE)

Once we finish learning the vocabulary, the token segmenter is used to tokenize a test sentence. The token segmenter just runs on test data the merges we have learned from the training data, greedily, in the order we learned them.

Word Normalization, Lemmatization and Stemming

Normalization

Word Normalization is the task of putting words/tokens in a standard format, choosing a single normal form for words with multiple forms like USA and US. This standardization may be valuable, despite the spelling information that is lost in the normalization process.

Case folding

It is a kind of normalization. Mapping all characters to lower case which is helpful for generalization in many tasks, such as information retrieval or speech recognition.

Lemmatization

It is the task of determining whether two words have the same root, despite their surface differences. The words dinner and dinners both have the lemma dinner. The most sophisticated methods for lemmatization involves complete morphological parsing of the words. Morphology is the study of the way words are built up from smaller meaning-bearing units called morphemes. Two broad classes of morphemes can be distinguished: stems - the central morpheme of the word, and affixes - adding additional meaning of various kinds.

The Porter Stemmer

It is a simple algorithm for removing the commoner morphological and inflexional endings from words.

Minimum Edit Distance

Much of NLP is concerned about measuring how similar two strings are. And for that we use edit distance. Formally, the Minimum Edit Distance between two strings is the minimum number of edit operations required to transform one string into the other. The edit operations are:

- Insertion - Insert a character into a string.

- Deletion - Delete a character from a string.

- Substitution - Replace a character in a string with another character.

Algorithm (MED)

The space of all possible edits is enormous. However, lots of distinct edit paths will end up in the same state, so rather than recomputing all those paths, we could just remember the shortest path to a state each time we saw it. This is called dynamic programming. The algorithm is as follows:

Given tow strings, the source string $X$ of length $n$ and the target string $Y$ of length $m$, we define a matrix $D$ of size $n+1 \times m+1$ where $D[i,j]$ is the minimum edit distance between the first $i$ characters of $X$ and the first $j$ characters of $Y$. The edit distance between $X$ and $Y$ is then $D[n,m]$. The algorithm is as follows:

In the base case, with a source substring of length $i$ but an empty target string, going from $i$ character to 0 requires $i$ deletes. With a target substring of length $j$ but an empty source string, going from $j$ characters to 0 requires $j$ inserts. Having computed the values for the base case, we can then compute larger $D[i, j]$ based on previously computed smaller values. The value of $D[i, j]$ is the minimum of the following three values:

\[D[i, j] = \min \begin{cases} D[i-1, j] + deleteCost(source[i]) \\ D[i, j-1] + insertCost(target[j]) \\ D[i-1, j-1] + replaceCost(source[i], target[j]) \end{cases}\]Implementation

The following is an implementation for the same:

int minDistance(string str1, string str2) {

int m = str1.length();

int n = str2.length();

int dp[m+1][n+1];

dp[0][0] = 0;

for(int i=1;i<=m;i++)dp[i][0] = dp[i-1][0]+1;

for(int i=1;i<=n;i++)dp[0][i] = dp[0][i-1]+1;

for(int i = 1; i <= m; i++){

for(int j = 1; j<= n; j++){

dp[i][j] = min({

dp[i-1][j] + 1, // delete

dp[i][j-1] + 1, // insert

dp[i-1][j-1] + (str1[i-1]!=str2[j-1]) // replace

});

}

}

return dp[m][n];

}

Here cost of delete, insert and replace are assumed to be 1, 1 and 1 respectively.